PosterGen: Aesthetic-Aware Paper-to-Poster Generation via Multi-Agent LLMs

Zhilin Zhang¹,²★, Xiang Zhang³★, Jiaqi Wei⁴, Yiwei Xu⁵, Chenyu You¹

¹Stony Brook University, ²New York University, ³University of British Columbia, ⁴Zhejiang University, ⁵University of California, Los Angeles

★Equal Contribution

Abstract

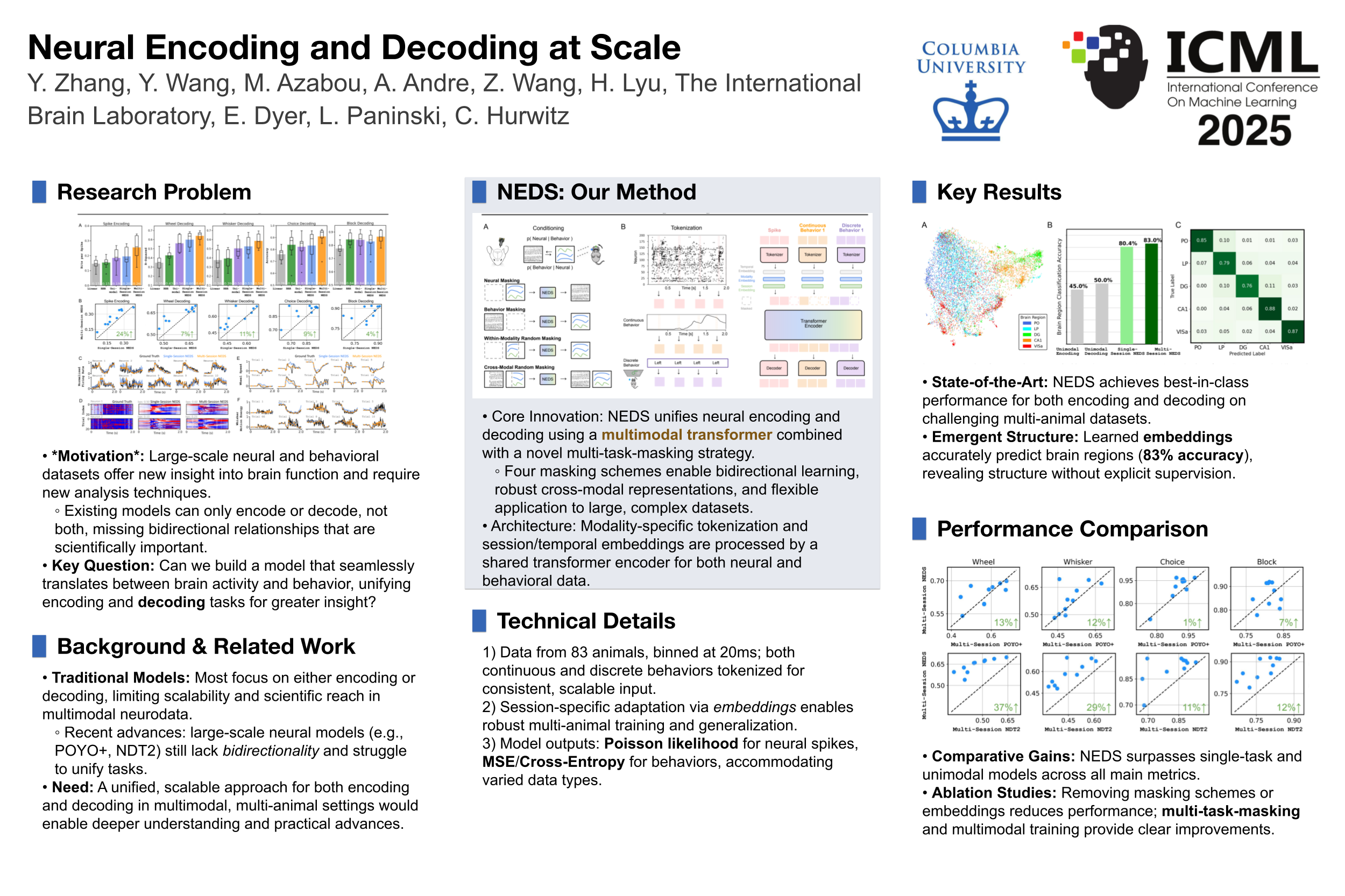

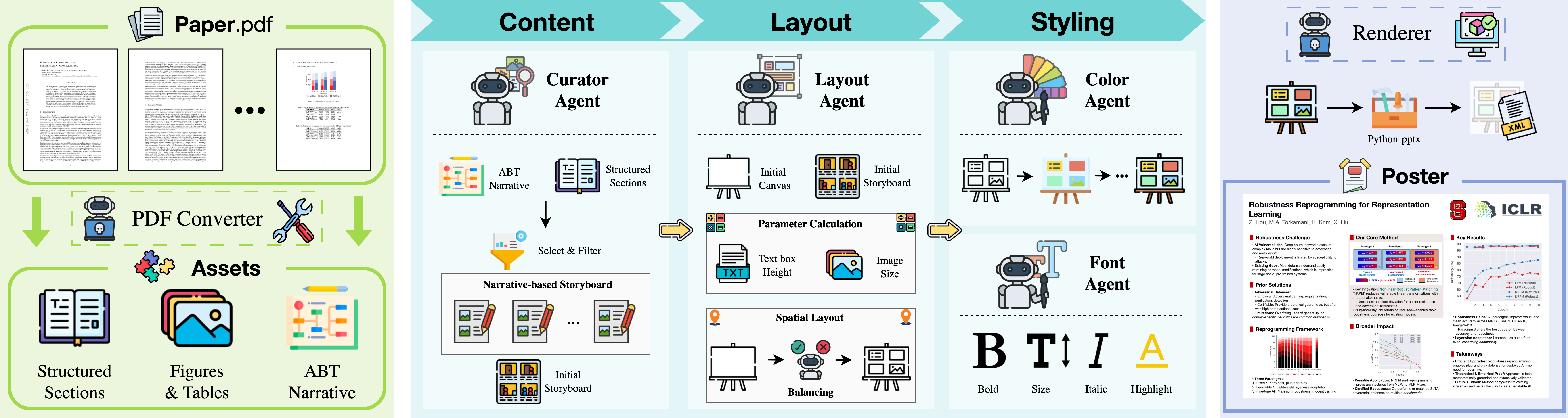

Multi-agent systems built upon large language models (LLMs) have demonstrated remarkable capabilities in tackling complex compositional tasks. In this work, we apply this paradigm to the paper-to-poster generation problem, a practical yet time-consuming process faced by researchers preparing for conferences. While recent approaches have attempted to automate this task, most neglect core design and aesthetic principles, resulting in posters that require substantial manual refinement. To address these design limitations, we propose PosterGen, a multi-agent framework that mirrors the workflow of professional poster designers. It consists of four collaborative specialized agents: (1) the Parser and Curator Agents extract content from the paper and organize it into a storyboard; (2) the Layout Agent maps the content into a coherent spatial layout; (3) the Stylist Agent applies visual design elements such as color and typography; and (4) the Renderer composes the final poster. Together, these agents produce posters that are both semantically grounded and visually appealing. To evaluate design quality, we introduce a vision-language model (VLM)-based rubric that measures layout balance, readability, and aesthetic coherence. Experimental results show that PosterGen consistently matches existing methods in content fidelity and significantly outperforms them in visual design, generating posters that are presentation-ready with minimal human refinement.

Method Overview

1. Parser Agent processes the input paper, extracting all text and visual assets and organizing them into a structured format focusing on an ABT narrative.

2. Aesthetic-Aware LLMs then transform this content into a styled layout: the Curator Agent creates a narrative-based storyboard, the Layout Agent calculates the precise spatial arrangement and balances the columns, and the Stylist Agents apply a harmonious color palette and a hierarchical typographic system.

3. Renderer module takes the styled metadata and produces the output poster via python-pptx.
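The three stages above can be read as a sequential pipeline that passes one shared state object from stage to stage. The sketch below is only an illustration under stated assumptions, not the authors' implementation: every name in it (`PosterState`, `parse_paper`, `curate`, `plan_layout`, `apply_style`, `render`) is hypothetical, the LLM calls are replaced by trivial stand-ins, and the renderer returns plain dictionaries instead of writing a slide with python-pptx.

```python
from dataclasses import dataclass, field


@dataclass
class PosterState:
    """Shared state handed from one stage to the next (hypothetical schema)."""
    paper_text: str
    sections: list = field(default_factory=list)    # filled by the parser
    storyboard: list = field(default_factory=list)  # filled by the curator
    layout: dict = field(default_factory=dict)      # filled by the layout stage
    style: dict = field(default_factory=dict)       # filled by the stylist stage


def parse_paper(state):
    # Stand-in for the Parser Agent: split on blank lines instead of calling an LLM.
    state.sections = [p.strip() for p in state.paper_text.split("\n\n") if p.strip()]
    return state


def curate(state):
    # Stand-in for the Curator Agent: one storyboard entry per parsed section.
    state.storyboard = [{"id": i, "text": s} for i, s in enumerate(state.sections)]
    return state


def plan_layout(state, columns=3):
    # Stand-in for the Layout Agent: assign contiguous runs of entries to columns.
    n = len(state.storyboard)
    state.layout = {e["id"]: i * columns // n for i, e in enumerate(state.storyboard)}
    return state


def apply_style(state):
    # Stand-in for the Stylist Agents: the values below are illustrative only.
    state.style = {"title_pt": 72, "body_pt": 28, "accent": "#1F4E79"}
    return state


def render(state):
    # Stand-in for the Renderer: the real system produces the poster via python-pptx.
    return [{"column": state.layout[e["id"]], "text": e["text"], **state.style}
            for e in state.storyboard]


def run_pipeline(paper_text):
    state = PosterState(paper_text=paper_text)
    for stage in (parse_paper, curate, plan_layout, apply_style):
        state = stage(state)
    return render(state)
```

Keeping each stage as a function over the same state object makes it easy to run the pipeline with individual stages swapped out, which is the shape an ablation over agents would need.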

Aesthetic-Aware LLMs

Our method embeds design principles as core logic within each specialized agent, establishing a cascade of structured design constraints.

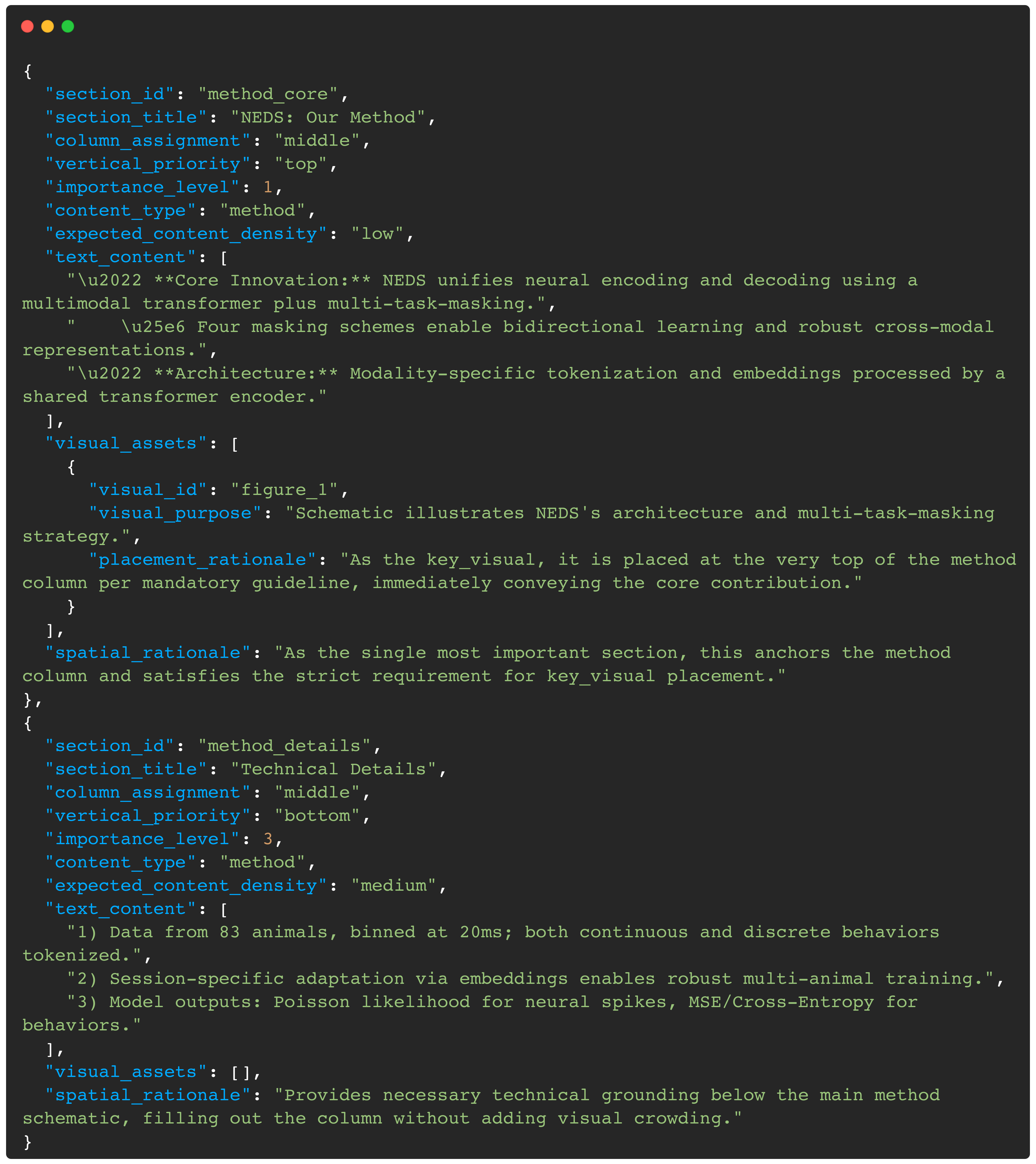

JSON-formatted storyboard

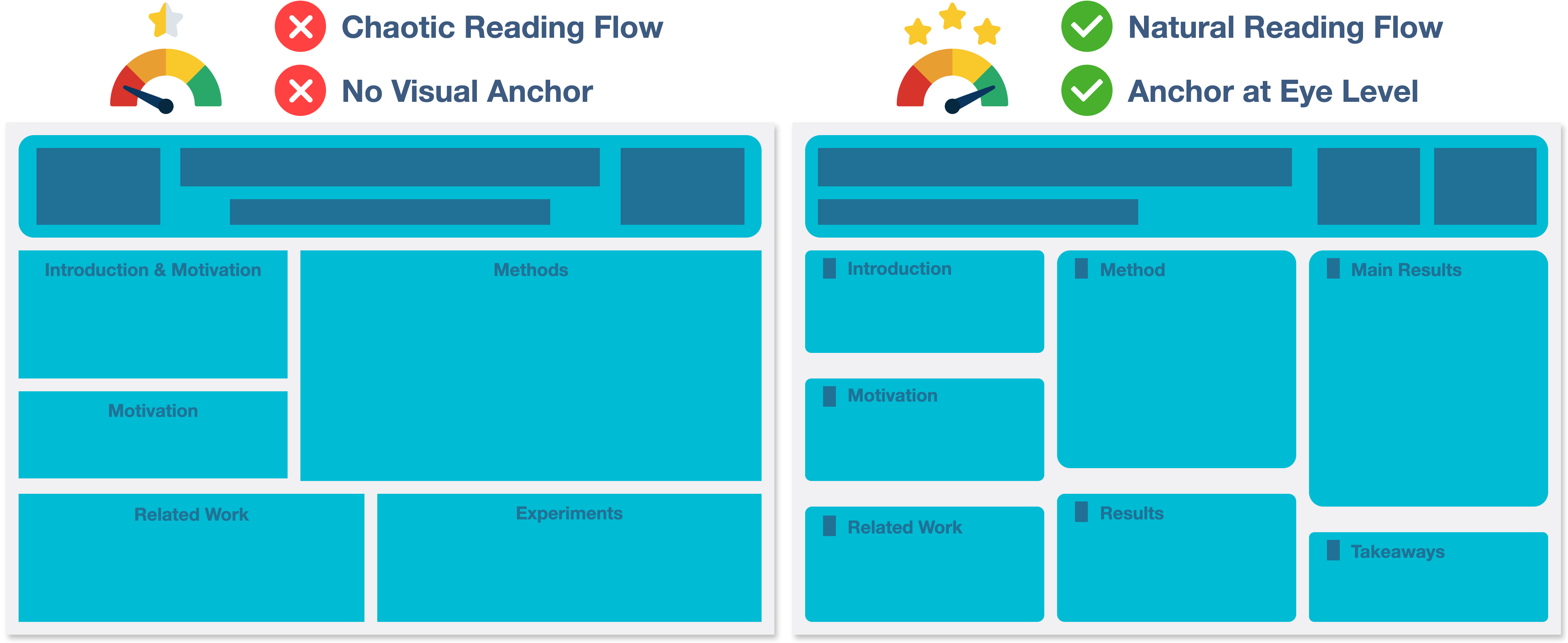
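To make the idea of a JSON-formatted storyboard concrete, the following sketch builds a small storyboard and checks it before serialization. The schema is an assumption for illustration: the field names (`id`, `role`, `heading`, `bullets`, `figures`) are hypothetical, and the `role` values assume that ABT is expanded in the usual way as And, But, Therefore.

```python
import json

# Hypothetical storyboard: field names are illustrative, not the paper's schema.
storyboard = {
    "title": "Example Paper Title",
    "sections": [
        {"id": "background", "role": "and", "heading": "Background",
         "bullets": ["What is already known."], "figures": []},
        {"id": "gap", "role": "but", "heading": "Problem",
         "bullets": ["What is missing or broken."], "figures": []},
        {"id": "method", "role": "therefore", "heading": "Method",
         "bullets": ["What the paper proposes."], "figures": ["fig_overview"]},
    ],
}

REQUIRED_KEYS = {"id", "role", "heading", "bullets", "figures"}


def validate_storyboard(board):
    """Return a list of problems; an empty list means these checks passed."""
    problems = []
    sections = board.get("sections", [])
    for section in sections:
        missing = REQUIRED_KEYS - section.keys()
        if missing:
            problems.append(f"{section.get('id', '?')}: missing {sorted(missing)}")
    roles = [s.get("role") for s in sections]
    for role in ("and", "but", "therefore"):
        if role not in roles:
            problems.append(f"no section with role '{role}'")
    return problems


serialized = json.dumps(storyboard, indent=2)
```

Validating the structure before it reaches the layout stage means a malformed LLM response is caught early rather than surfacing as a broken poster.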

Three-column and left-aligned layout structure

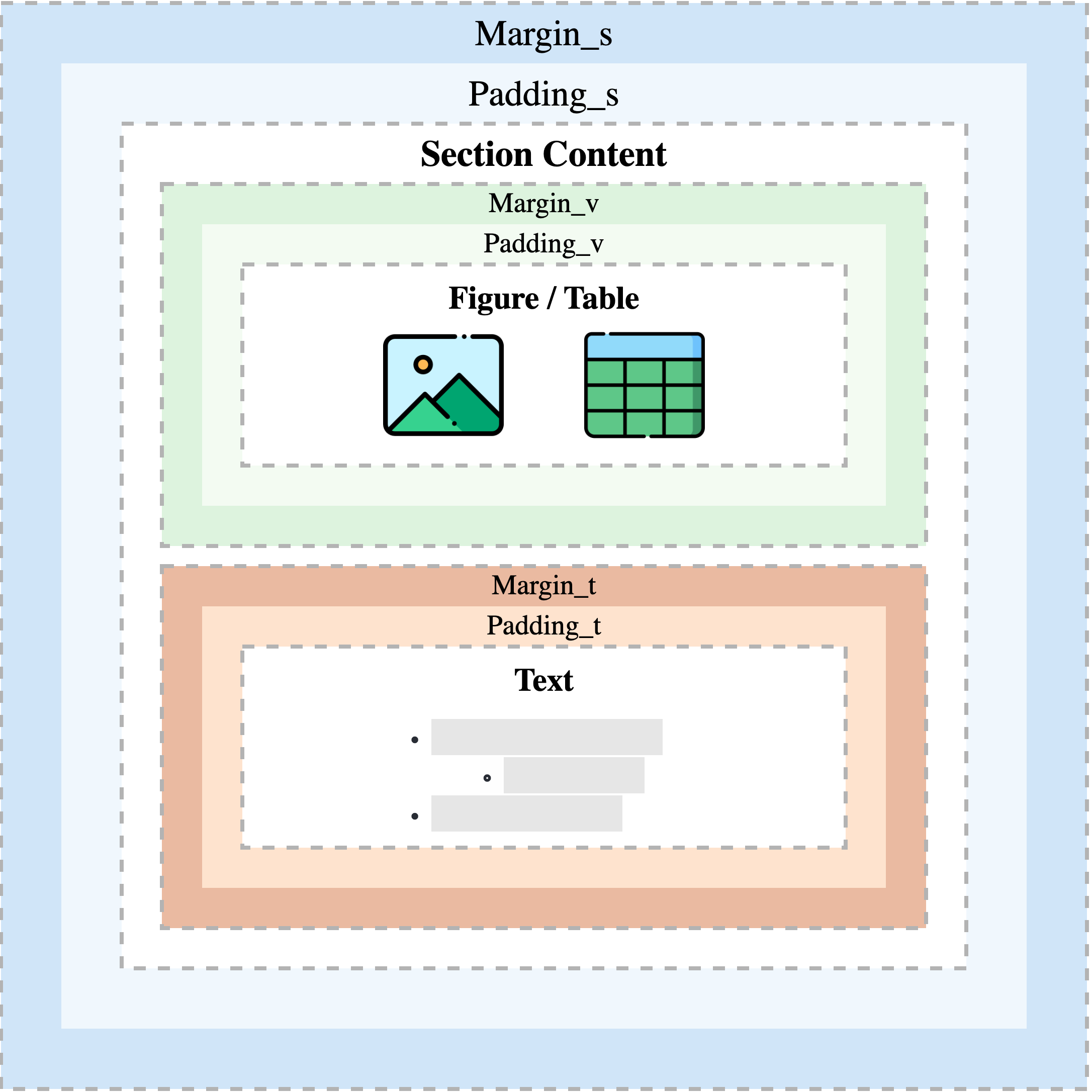
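One way to balance a three-column layout while preserving reading order is to treat it as a partition problem: split the ordered sections into contiguous columns so that the tallest column is as short as possible. The sketch below is an assumption about how such balancing could be done, not the Layout Agent's actual procedure; `balance_columns` and its estimated `heights` input are hypothetical.

```python
from itertools import combinations


def balance_columns(heights, columns=3):
    """Split sections, kept in reading order, into contiguous columns.

    Tries every placement of the column breaks and keeps the first one whose
    tallest column is shortest. Brute force is adequate for poster-sized inputs.
    """
    n = len(heights)
    if n < columns:
        raise ValueError("need at least one section per column")
    best_bounds, best_height = None, float("inf")
    for cuts in combinations(range(1, n), columns - 1):
        bounds = (0, *cuts, n)
        tallest = max(sum(heights[a:b]) for a, b in zip(bounds, bounds[1:]))
        if tallest < best_height:
            best_bounds, best_height = bounds, tallest
    # Return the section indices assigned to each column, left to right.
    return [list(range(a, b)) for a, b in zip(best_bounds, best_bounds[1:])]
```

For estimated heights `[30, 10, 20, 20, 10]`, the function returns `[[0], [1, 2], [3, 4]]`, which gives three columns of height 30 each.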

CSS-like box model within single section

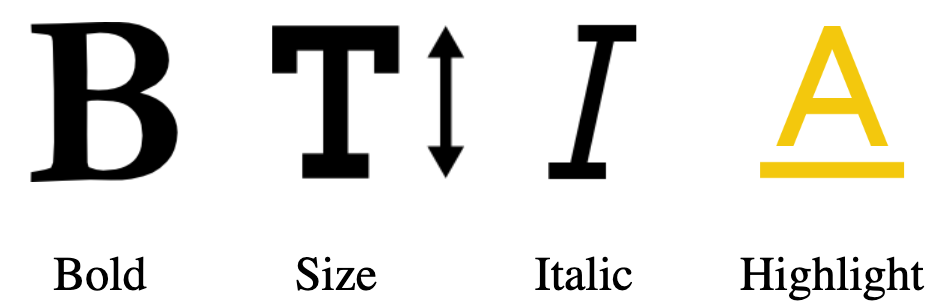
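A CSS-like box model shrinks a section's outer rectangle by its margin, border, and padding to obtain the area available for content. The sketch below shows that arithmetic with uniform insets on all four sides; the `Box` type, the `content_box` function, and the choice of units are hypothetical and not taken from the PosterGen code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float


def content_box(outer, margin=0.0, border=0.0, padding=0.0):
    """Shrink an outer box by margin, border, and padding on every side."""
    inset = margin + border + padding
    width = outer.width - 2 * inset
    height = outer.height - 2 * inset
    if width <= 0 or height <= 0:
        raise ValueError("insets leave no room for content")
    return Box(outer.x + inset, outer.y + inset, width, height)
```

For example, a 10 by 8 section at the origin with a margin of 0.5, a border of 0.25, and a padding of 0.25 has a total inset of 1.0 per side, leaving an 8 by 6 content area starting at (1, 1).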

Different highlighting styles

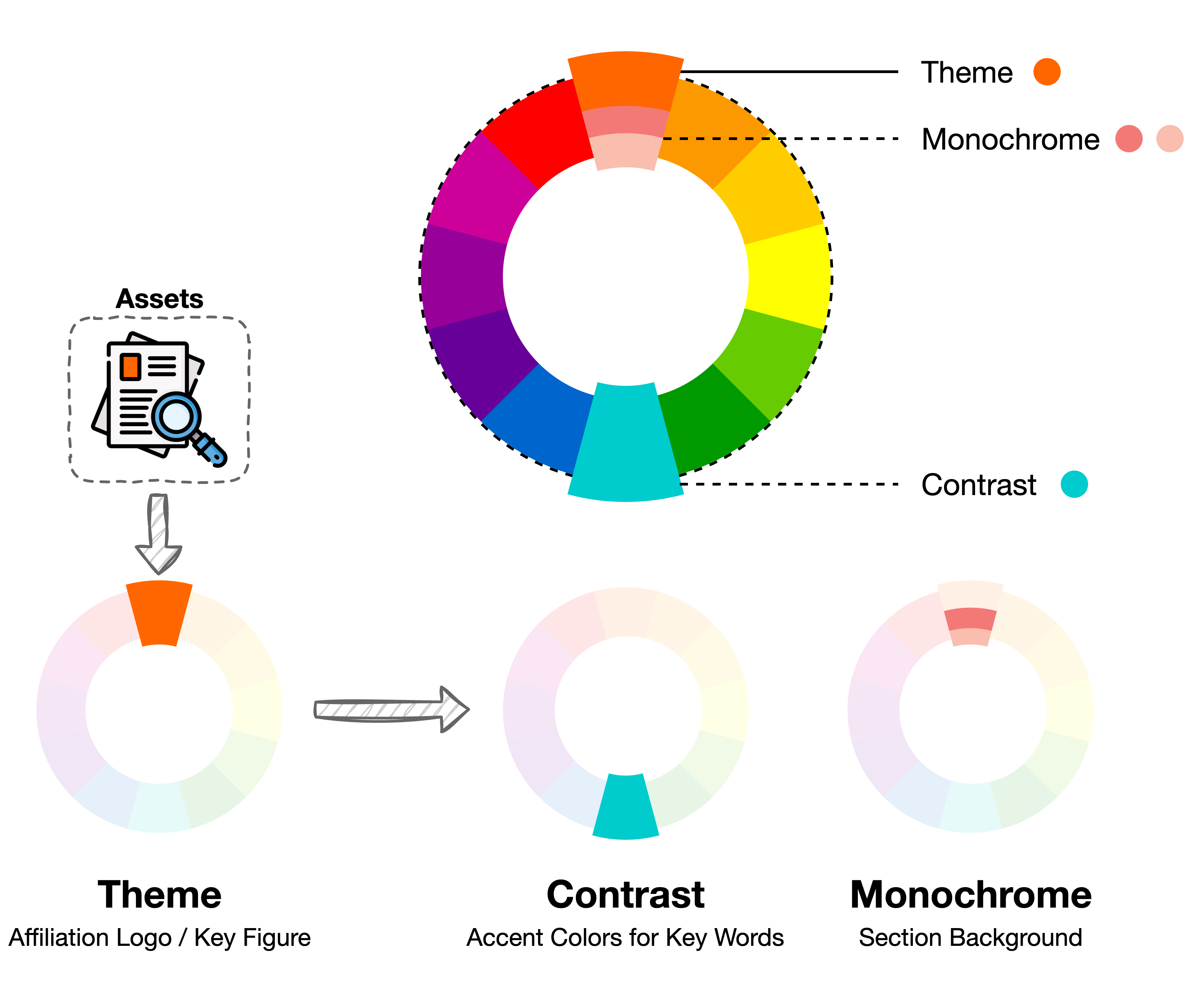

Harmonious color palette

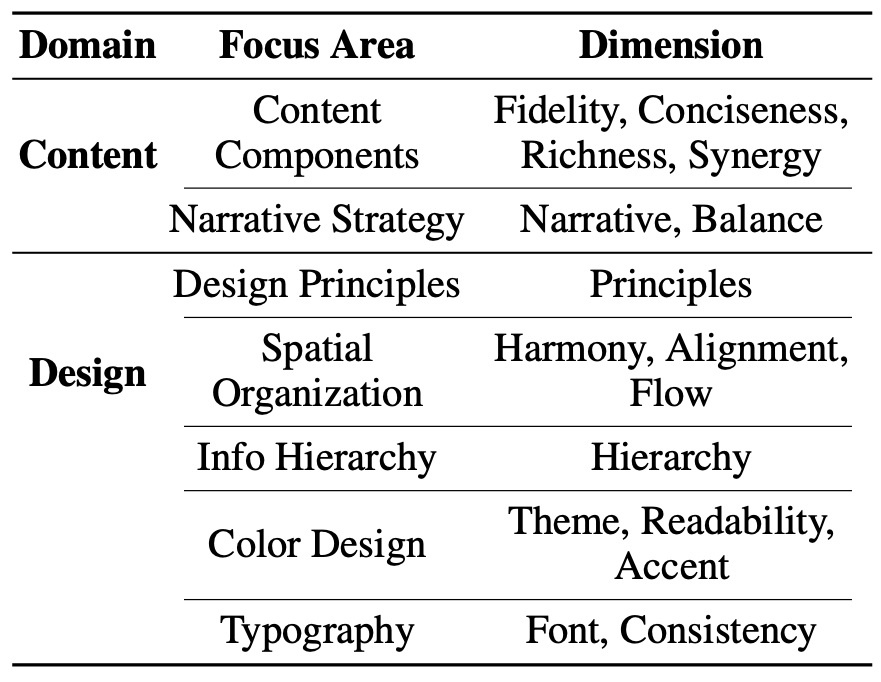
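The text here does not spell out how the palette is derived. A common way to keep a palette limited and consistent is to fix one theme color and vary only its lightness, and the sketch below illustrates that approach using Python's standard `colorsys` module. The function names and the lightness values 0.92 and 0.20 are assumptions chosen for illustration.

```python
import colorsys


def hex_to_rgb(value):
    """Convert '#RRGGBB' to three floats in [0, 1]."""
    value = value.lstrip("#")
    return tuple(int(value[i:i + 2], 16) / 255 for i in (0, 2, 4))


def rgb_to_hex(rgb):
    """Convert three floats in [0, 1] to '#RRGGBB'."""
    return "#" + "".join(f"{round(c * 255):02X}" for c in rgb)


def monochrome_palette(theme_hex):
    """Vary lightness only, keeping the theme hue and saturation."""
    h, l, s = colorsys.rgb_to_hls(*hex_to_rgb(theme_hex))
    return {
        "theme": theme_hex.upper(),
        "light": rgb_to_hex(colorsys.hls_to_rgb(h, 0.92, s)),  # e.g. section backgrounds
        "dark": rgb_to_hex(colorsys.hls_to_rgb(h, 0.20, s)),   # e.g. headings and text
    }
```

Because every derived color shares the theme's hue, the result stays within one color family, which is one simple reading of "harmonious".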

Evaluation Metrics

We evaluate the generated posters using a vision-language model (VLM) as an expert judge.

Poster Content

This part verifies that the poster is an accurate, concise, and coherent narrative of the source paper, free from factual errors or overly dense text.

Poster Design

This part evaluates the poster's visual execution, i.e., the application of design principles (Alignment, Proximity, Repetition, Contrast), effective spatial organization, a clear information hierarchy, and the use of a limited and consistent color palette and a disciplined typographic system.

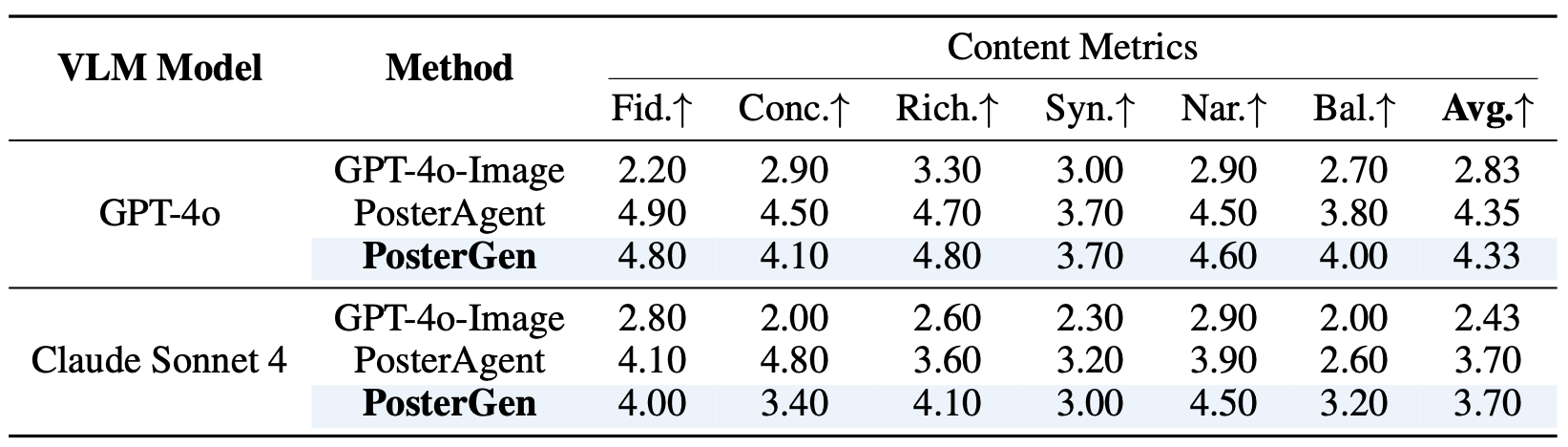

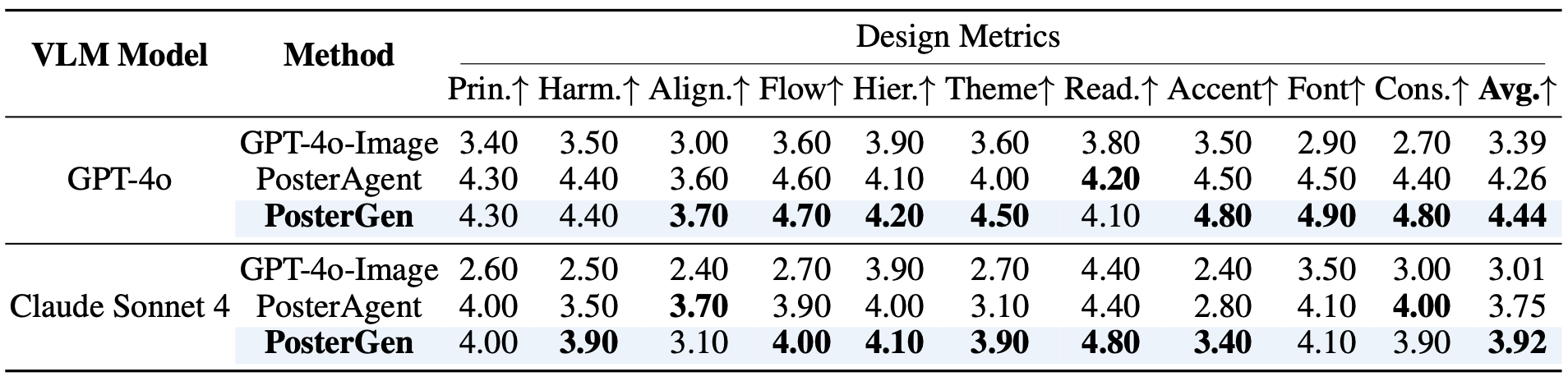

Quantitative Results

VLM-as-Judge results on content metrics across different poster generation methods, with scores averaged over 10 posters rated on a 1-5 scale.

VLM-as-Judge results on design metrics across different poster generation methods, with scores averaged over 10 posters rated on a 1-5 scale. The best scores for each design metric are bolded.

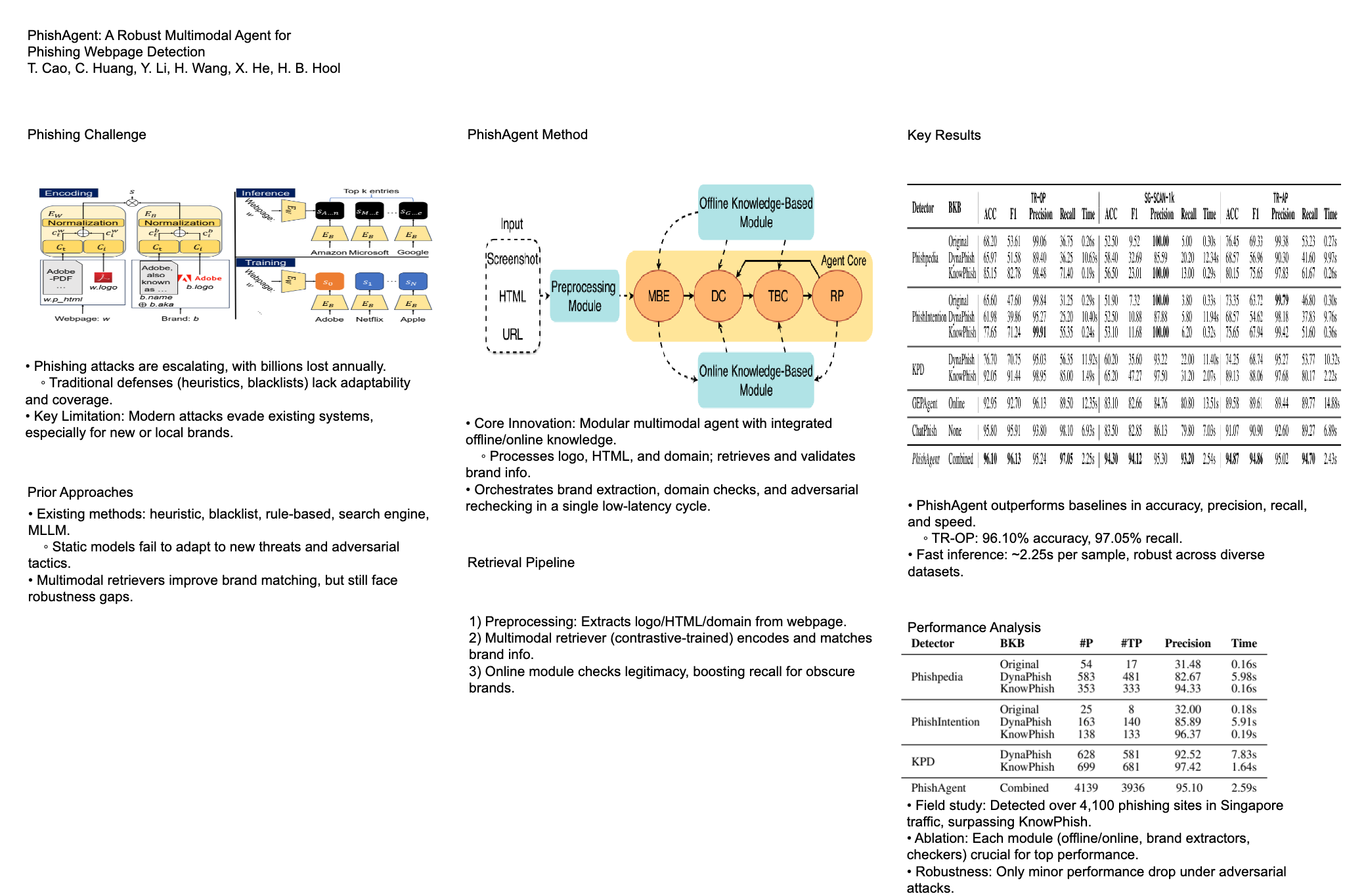

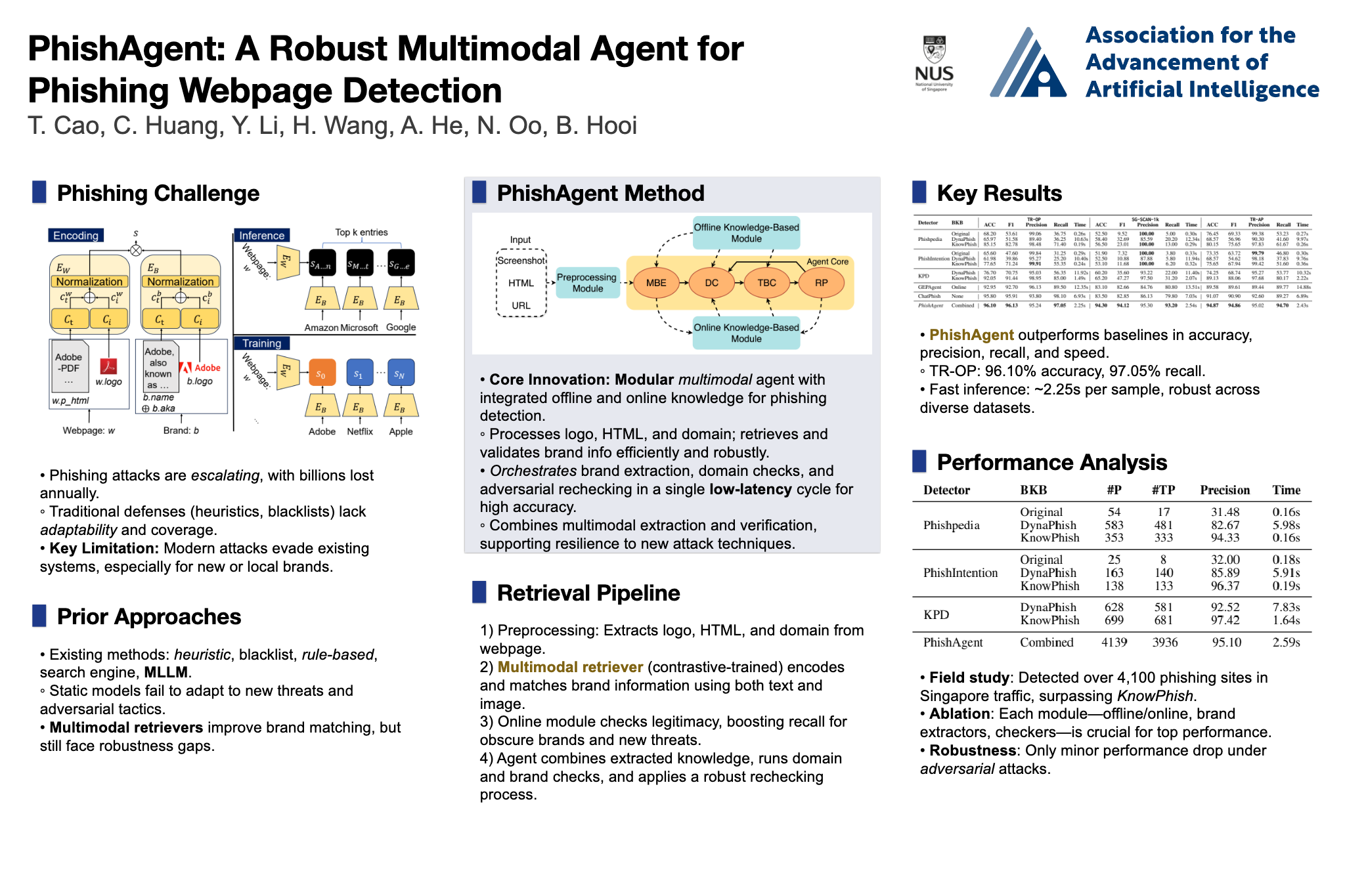
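The aggregation described in the captions above (per-poster ratings on a 1-5 scale, averaged per method, with the best score per metric highlighted) can be sketched as follows. The `summarize` function and its input layout are hypothetical, and any method names or ratings passed to it in an example are placeholders rather than results from the paper.

```python
from statistics import mean


def summarize(scores):
    """Average ratings per method and metric, and find the best method per metric.

    scores: {method: {metric: [one 1-5 rating per poster]}}
    Returns (means, best), where best maps each metric to its top method.
    """
    for metrics in scores.values():
        for ratings in metrics.values():
            if any(not 1 <= r <= 5 for r in ratings):
                raise ValueError("ratings must lie on the 1-5 scale")
    means = {method: {metric: mean(ratings) for metric, ratings in metrics.items()}
             for method, metrics in scores.items()}
    metric_names = {metric for metrics in means.values() for metric in metrics}
    best = {metric: max(means, key=lambda m: means[m].get(metric, float("-inf")))
            for metric in metric_names}
    return means, best
```

The `best` mapping is what a results table would use to decide which cell to bold for each metric.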

Ablation Study

We conduct an ablation study on a single paper (Cao et al. 2025) to isolate the contributions of the three core agents in our PosterGen pipeline: the Curator Agent, the Layout Agent, and the Stylist Agents.

(a) Curator Agent

(b) Curator Agent + Layout Agent

(c) Curator Agent + Layout Agent + Stylist Agents

BibTeX

@article{zhang2025postergen,
  title={PosterGen: Aesthetic-Aware Paper-to-Poster Generation via Multi-Agent LLMs},
  author={Zhilin Zhang and Xiang Zhang and Jiaqi Wei and Yiwei Xu and Chenyu You},
  journal={arXiv:2508.17188},
  year={2025}
}